Walkthrough of an end-to-end example using API calls

This page walks through an example of using the Gigasheet API to upload data, filter it, and export the filtered results.

Each step uses API calls that have their own pages in the API documentation, where you can try each call interactively in your browser.

Python examples are also available in the examples directory of the Gigasheet Python SDK: https://github.com/gigasheetco/gigasheet-python/tree/main/examples

A. Load your data

- Upload your data to a cloud storage service and create a presigned link to it. For Amazon S3, see the AWS documentation on uploading objects to S3 and on sharing objects with presigned URLs.
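If your file is on local disk and you use Amazon S3, the upload and presign steps can be combined in a small shell function. This is a hypothetical helper, not part of Gigasheet: the name presign_for_gigasheet and the one-hour default expiry are assumptions, while aws s3 cp and aws s3 presign (whose --expires-in value is in seconds) are standard AWS CLI commands. It assumes the AWS CLI is installed and configured with write access to the bucket.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a Gigasheet tool): copy a local file to S3 and
# print a presigned GET URL for it. Assumes a configured AWS CLI.

# Usage: presign_for_gigasheet BUCKET FILE [EXPIRES_SECONDS]
presign_for_gigasheet() {
  local bucket="$1" file="$2" expires="${3:-3600}"
  local uri="s3://$bucket/$(basename "$file")"

  # Upload the file; send the CLI's progress output to stderr so that
  # stdout carries only the presigned URL.
  aws s3 cp "$file" "$uri" >&2 || return 1

  # Print a presigned URL valid for $expires seconds.
  aws s3 presign "$uri" --expires-in "$expires"
}
```

For example, presigned_url=$(presign_for_gigasheet my-bucket ./data.csv) captures the URL to send as the url parameter in the next step. Choose an expiry long enough for Gigasheet to fetch the file.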

- Call POST /upload/url with the presigned link as the url parameter to load your data into Gigasheet. Note the sheet handle in the Handle field of the response.

Example request:

curl --request POST \
  --url https://api.gigasheet.com/upload/url \
  --header 'X-GIGASHEET-TOKEN: XXXXXX' \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --data '
{
  "name": "My Dataset",
  "url": "https://my-bucket.s3.us-east-1.amazonaws.com/config.xml?response-content-disposition=inline&X-Amz-Security-Token=really-long-thing-will-be-here"
}
'

Example response:

{
  "Success": true,
  "Message": "File entry created",
  "Handle": "6030253a_ffd2_42b5_b1a2_fe914d4e7db7", # <-- sheet handle
  "Error": null
}
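The same request can be wrapped in a shell function that prints the sheet handle, which is convenient when scripting the later steps. This is a sketch, not an official client: the function names, the GIGASHEET_TOKEN and GIGASHEET_API environment variables, and the use of python3 for JSON encoding and parsing are assumptions (jq would work equally well).

```shell
#!/usr/bin/env bash
# Sketch: load a file into Gigasheet from a presigned URL via POST /upload/url
# and print the sheet handle. Assumes GIGASHEET_TOKEN holds your API key.

GIGASHEET_API="${GIGASHEET_API:-https://api.gigasheet.com}"

# Build the request body; json.dumps escapes quotes in the name or URL.
upload_url_body() {
  python3 -c 'import json, sys; print(json.dumps({"name": sys.argv[1], "url": sys.argv[2]}))' "$1" "$2"
}

# Read a JSON object on stdin and print one top-level field.
json_get() {
  python3 -c 'import json, sys; print(json.load(sys.stdin)[sys.argv[1]])' "$1"
}

# Usage: upload_from_url NAME PRESIGNED_URL  -> prints the sheet handle
upload_from_url() {
  curl --silent --show-error --fail --request POST \
    --url "$GIGASHEET_API/upload/url" \
    --header "X-GIGASHEET-TOKEN: $GIGASHEET_TOKEN" \
    --header 'accept: application/json' \
    --header 'content-type: application/json' \
    --data "$(upload_url_body "$1" "$2")" |
    json_get Handle
}
```

For example: sheet=$(upload_from_url 'My Dataset' "$presigned_url"). With --fail, an HTTP error produces no body, so json_get fails instead of printing a bogus handle.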

- Poll GET /dataset/{handle} with the sheet handle, inspecting the Status field of the response. The status goes from loading to processing to processed; poll until it becomes processed.
  - NOTE: If there is a problem parsing your file, the status will be error instead. In that case, the DetailedStatus field of the response contains more information about the problem.
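A polling loop for this step might look like the following. It is a sketch that assumes the response carries a top-level Status string field as described above; the function names, the 5-second interval, and the 120-attempt limit (POLL_INTERVAL and MAX_POLLS) are illustrative choices, not documented limits. python3 is assumed for JSON parsing.

```shell
#!/usr/bin/env bash
# Sketch: poll GET /dataset/{handle} until Status is "processed".
# Assumes a top-level "Status" field and GIGASHEET_TOKEN holding your API key.

GIGASHEET_API="${GIGASHEET_API:-https://api.gigasheet.com}"

# Print the Status field for one dataset handle (empty if missing).
get_status() {
  curl --silent --show-error --fail \
    --url "$GIGASHEET_API/dataset/$1" \
    --header "X-GIGASHEET-TOKEN: $GIGASHEET_TOKEN" \
    --header 'accept: application/json' |
    python3 -c 'import json, sys; print(json.load(sys.stdin).get("Status", ""))'
}

# Usage: wait_until_processed HANDLE
# Returns 0 on "processed", 1 on "error" or after MAX_POLLS attempts.
wait_until_processed() {
  local handle="$1" attempt=0 status=""
  while [ "$attempt" -lt "${MAX_POLLS:-120}" ]; do
    attempt=$((attempt + 1))
    status=$(get_status "$handle")
    case "$status" in
      processed) return 0 ;;
      error)
        echo "$handle: status is error; see DetailedStatus in the response" >&2
        return 1 ;;
    esac
    sleep "${POLL_INTERVAL:-5}"
  done
  echo "$handle: gave up after $attempt polls (last status: ${status:-none})" >&2
  return 1
}
```

For example: wait_until_processed "$sheet" || exit 1. The attempt limit keeps a script from spinning forever if requests keep failing. The same loop also fits the export handle in Section C, which is polled through the same endpoint.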

Alternative: If you do not want to use cloud storage, you may be able to use /upload/direct to upload your data directly from your local disk. Because there is a limit on how much data can be sent in a single request, you may need to upload in multiple steps using the targetHandle parameter.

B. Filter your data

- Visit the Gigasheet Web Application at app.gigasheet.com and log in. You should see the data from Section A already present as a file in your library. Click the library entry to open the file.

  - NOTE: If you do not wish to use the Gigasheet Web Application, see "Filter Model Structure" in the Filter Model Detail Guide for details on how to construct a filter model from scratch. However, we recommend starting in the Web Application: create the filter there once, then reuse it in your API calls.

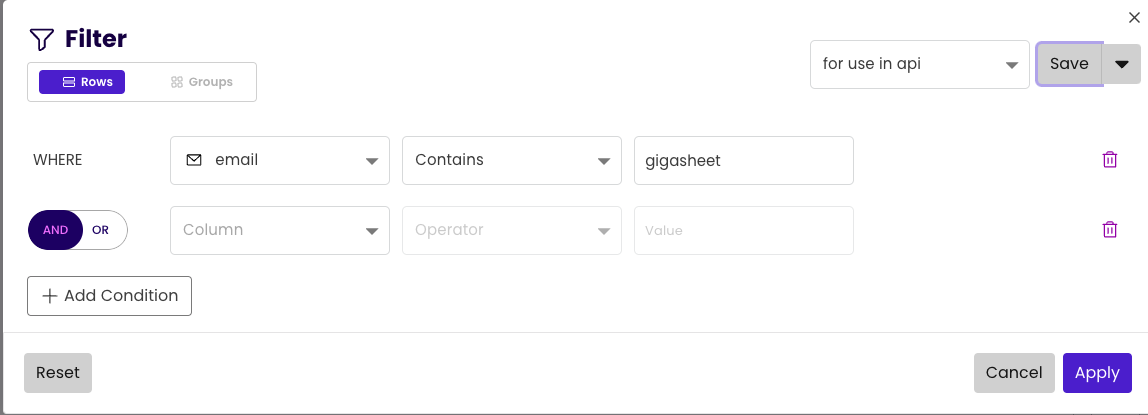

- In the Gigasheet Web Application, create a filter that selects the data you want, then save it as a Saved Filter.

- Call GET /filter-templates and use the Name fields in the response to find the saved filter from step 2. Its handle is the value of the Id field.

Example request:

curl --request GET \
  --url https://api.gigasheet.com/filter-templates \
  --header 'X-GIGASHEET-TOKEN: XXXXXX' \
  --header 'accept: application/json'

Example response:

[
  {
    "Name": "for use in api",
    "CreatedAt": "2023-07-17T17:20:58.713074Z",
    "Id": "ac1c961f_2c3f_43d3_b142_510621b20909", # <-- saved filter handle
    ...
  }
]
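To script this lookup, the response can be searched for a given Name. The sketch below assumes, as in the example response, that each entry has Name and Id fields; it prints the Id of the first matching entry and fails if none matches. The function names and the use of python3 for JSON parsing are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: find a saved filter's handle (its Id) by Name via GET /filter-templates.
# Assumes GIGASHEET_TOKEN holds your API key.

GIGASHEET_API="${GIGASHEET_API:-https://api.gigasheet.com}"

# Read the /filter-templates JSON array on stdin; print the Id whose Name is $1.
# Exits non-zero (with a message on stderr) if no saved filter has that name.
filter_id_by_name() {
  python3 -c '
import json, sys
name = sys.argv[1]
ids = [t["Id"] for t in json.load(sys.stdin) if t.get("Name") == name]
if not ids:
    sys.exit("no saved filter named %r" % name)
print(ids[0])
' "$1"
}

# Usage: find_filter_handle NAME  -> prints the saved filter handle
find_filter_handle() {
  curl --silent --show-error --fail \
    --url "$GIGASHEET_API/filter-templates" \
    --header "X-GIGASHEET-TOKEN: $GIGASHEET_TOKEN" \
    --header 'accept: application/json' |
    filter_id_by_name "$1"
}
```

For example: filter=$(find_filter_handle 'for use in api'). If several saved filters share a name, only the first is returned, so unique names are easier to script against.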

- Call GET /filter-templates/{filter-handle}/on-sheet/{handle} with your filter handle from step 3 and your sheet handle from Section A. The filterModel field of the response is a JSON object containing the filter model as applied to the sheet; you will use it when you create the export.

Example request:

curl --request GET \
  --url https://api.gigasheet.com/filter-templates/ac1c961f_2c3f_43d3_b142_510621b20909/on-sheet/6030253a_ffd2_42b5_b1a2_fe914d4e7db7 \
  --header 'X-GIGASHEET-TOKEN: XXXXXX' \
  --header 'accept: application/json'

Example response:

{
  "name": "for use in api",
  "id": "ac1c961f_2c3f_43d3_b142_510621b20909",
  "sheet": "6030253a_ffd2_42b5_b1a2_fe914d4e7db7",
  "owner": "[email protected]",
  "updatedAt": "2023-07-17T17:21:06.273822Z",
  "createdAt": "2023-07-17T17:20:58.713074Z",
  "filterModel": { # <-- next section will use the filter model from here
    "_cnf_": [
      [
        {
          "colId": "D",
          "filter": [
            "gigasheet"
          ],
          "filterType": "text",
          "isCaseSensitive": false,
          "type": "containsAny"
        }
      ]
    ]
  }
}
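A scripted version of this step can keep just the filterModel object, for example in a file, so it can be reused when creating the export. The helper names and the filter-model.json file name are hypothetical, and python3 is assumed for JSON parsing. Note the argument order, which follows the path: filter handle first, sheet handle second.

```shell
#!/usr/bin/env bash
# Sketch: fetch a saved filter as applied to a sheet and print only filterModel.
# Assumes GIGASHEET_TOKEN holds your API key.

GIGASHEET_API="${GIGASHEET_API:-https://api.gigasheet.com}"

# Read the on-sheet response on stdin; print its filterModel as compact JSON.
extract_filter_model() {
  python3 -c 'import json, sys; print(json.dumps(json.load(sys.stdin)["filterModel"]))'
}

# Usage: fetch_filter_model FILTER_HANDLE SHEET_HANDLE > filter-model.json
fetch_filter_model() {
  curl --silent --show-error --fail \
    --url "$GIGASHEET_API/filter-templates/$1/on-sheet/$2" \
    --header "X-GIGASHEET-TOKEN: $GIGASHEET_TOKEN" \
    --header 'accept: application/json' |
    extract_filter_model
}
```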

For more information about filters, see the Filter Model Detail Guide.

C. Export your data

- Call POST /dataset/{handle}/export with the handle of the sheet you want to export. Send the filter model from Section B as the filterModel value inside the gridState parameter. This starts generating an export of your data with the filter applied. Note the export handle in the handle field of the response.

Example request:

curl --request POST \
  --url https://api.gigasheet.com/dataset/6030253a_ffd2_42b5_b1a2_fe914d4e7db7/export \
  --header 'X-GIGASHEET-TOKEN: XXXXXX' \
  --header 'accept: application/json' \
  --header 'content-type: application/json' \
  --data '
{
  "gridState": {
    "filterModel": {
      "_cnf_": [
        [
          {
            "colId": "D",
            "filter": [
              "gigasheet"
            ],
            "filterType": "text",
            "isCaseSensitive": false,
            "type": "containsAny"
          }
        ]
      ]
    }
  }
}
'

Example response:

{"handle":"ef52f6f1_2cf6_4eb3_ba3e_2221020ad380"} # <-- handle of the export
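In a script, the request body can be built from a filter model stored in a JSON file (for example, the filterModel object from the Section B response) instead of being pasted by hand. This sketch wraps the model as gridState.filterModel and prints the handle field of the response. The function names and the file-based hand-off are assumptions; python3 handles the JSON.

```shell
#!/usr/bin/env bash
# Sketch: start a filtered export via POST /dataset/{handle}/export and print
# the export handle. Assumes GIGASHEET_TOKEN holds your API key.

GIGASHEET_API="${GIGASHEET_API:-https://api.gigasheet.com}"

# Wrap the filter model in FILE as {"gridState": {"filterModel": ...}}.
export_body() {
  python3 -c '
import json, sys
with open(sys.argv[1]) as f:
    print(json.dumps({"gridState": {"filterModel": json.load(f)}}))
' "$1"
}

# Usage: start_export SHEET_HANDLE FILTER_MODEL_FILE  -> prints the export handle
start_export() {
  curl --silent --show-error --fail --request POST \
    --url "$GIGASHEET_API/dataset/$1/export" \
    --header "X-GIGASHEET-TOKEN: $GIGASHEET_TOKEN" \
    --header 'accept: application/json' \
    --header 'content-type: application/json' \
    --data "$(export_body "$2")" |
    python3 -c 'import json, sys; print(json.load(sys.stdin)["handle"])'
}
```

For example: export=$(start_export "$sheet" filter-model.json). Keep this export handle separate from the sheet handle; the download step below needs the export handle.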

- Poll GET /dataset/{handle} with the handle of the export, inspecting the Status field of the response. The status goes from loading to processing to processed; poll until it becomes processed.
  - NOTE: If there is an issue with your export, the status will be error instead.

- Call GET /dataset/{handle}/download-export with the handle of the export from step 1 of this section (NOT the handle of the sheet). The response includes a presignedUrl field containing a temporary, public presigned URL for your export.

Example request:

curl --request GET \
  --url https://api.gigasheet.com/dataset/ef52f6f1_2cf6_4eb3_ba3e_2221020ad380/download-export \
  --header 'X-GIGASHEET-TOKEN: XXXXXX' \
  --header 'accept: application/json'

Example response:

{
  "presignedUrl": "https://gigasheet-export-uploads.s3.amazonaws.com/ef52f6f1_2cf6_4eb3_ba3e_2221020ad380-20230717174848.zip?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAXTOLCDI7G5IZZAUQ%2F20230717%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20230717T174914Z&X-Amz-Expires=1800&X-Amz-SignedHeaders=host&response-content-disposition=attachment%3B%20filename%3D%22fake-names-emails_exported%20export.zip%22&X-Amz-Signature=a18080a1fed614bd9f903ee4c2b1ce1bbd7090ee3a4dde4bf0713237617eff04",
  "error": ""
}
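The presigned URL can be pulled out of this response in a script as follows. The sketch treats a non-empty error field or a missing presignedUrl as a failure; that reading of the error field, the function names, and the use of python3 are assumptions.

```shell
#!/usr/bin/env bash
# Sketch: get the presigned download URL for a finished export via
# GET /dataset/{handle}/download-export. Assumes GIGASHEET_TOKEN holds your API key.

GIGASHEET_API="${GIGASHEET_API:-https://api.gigasheet.com}"

# Read the response on stdin; print presignedUrl, or exit non-zero if the
# "error" field is non-empty or no URL was returned.
presigned_url_from_response() {
  python3 -c '
import json, sys
resp = json.load(sys.stdin)
if resp.get("error") or not resp.get("presignedUrl"):
    sys.exit("download-export failed: %s" % (resp.get("error") or "no presignedUrl"))
print(resp["presignedUrl"])
'
}

# Usage: export_download_url EXPORT_HANDLE  (the export handle, not the sheet handle)
export_download_url() {
  curl --silent --show-error --fail \
    --url "$GIGASHEET_API/dataset/$1/download-export" \
    --header "X-GIGASHEET-TOKEN: $GIGASHEET_TOKEN" \
    --header 'accept: application/json' |
    presigned_url_from_response
}
```

For example: curl --silent --show-error --fail -o data.csv.zip "$(export_download_url "$export")". The example URL above carries X-Amz-Expires=1800, meaning it is valid for 30 minutes after signing, so download promptly.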

- Download the contents of the presigned URL from step 3. This is a zipped CSV file of your filtered data.

Example shell session for downloading and inspecting the exported data:

❯ presigned='https://gigasheet-export-uploads.s3.amazonaws.com/ef52f6f1_2cf6_4eb3_ba3e_2221020ad380-20230717174848.zip?X-Amz-Algorithm=AWS4-HMAC-SHA256&X-Amz-Credential=AKIAXTOLCDI7G5IZZAUQ%2F20230717%2Fus-east-1%2Fs3%2Faws4_request&X-Amz-Date=20230717T174914Z&X-Amz-Expires=1800&X-Amz-SignedHeaders=host&response-content-disposition=attachment%3B%20filename%3D%22fake-names-emails_exported%20export.zip%22&X-Amz-Signature=a18080a1fed614bd9f903ee4c2b1ce1bbd7090ee3a4dde4bf0713237617eff04'
❯ curl "$presigned" > data.csv.zip
  % Total    % Received % Xferd  Average Speed   Time    Time     Time  Current
                                 Dload  Upload   Total   Spent    Left  Speed
100  3020  100  3020    0     0   1236      0 --:--:-- --:--:-- --:--:--  1274
❯ unzip data.csv.zip
Archive:  data.csv.zip
  inflating: gigasheet data load export.csv
❯ head -n 2 'gigasheet data load export.csv'
"first_name","last_name","email","email - Format","email - Domain"
"John","Smith","[email protected]","Correct","gigasheet.com"

You have now successfully loaded, filtered, and exported data with Gigasheet!